The following program calculates the minimum point of a multi-variable function using the Conjugate Gradient (Fletcher-Reeves) method.


The program prompts you to either use the predefined default input values or to enter the following:

1. The initial guess for each variable.

2. The function tolerance.

3. The maximum number of iterations.

If you choose the default input values, the program displays these values and proceeds to find the optimum point. If you choose to be prompted, the program displays the name of each input variable along with its default value. You can then either enter a new value or simply press Enter to accept the default. This approach lets you change only a few input values quickly.
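The press-Enter-for-default behavior can be sketched on its own. The helper below, `ParseOrDefault`, is a hypothetical name that does not appear in the program. It assumes invariant-culture number formatting and uses `double.TryParse`, so a mistyped entry falls back to the default instead of throwing; the listing itself calls `double.Parse`.

```csharp
using System;
using System.Globalization;

static class InputSketch
{
    // Hypothetical helper: returns the parsed number, or the default when
    // the input is null, empty, whitespace, or not a valid number.
    public static double ParseOrDefault(string input, double defaultValue)
    {
        if (string.IsNullOrWhiteSpace(input))
        {
            return defaultValue;
        }
        double value;
        // Assumption: invariant culture, so "0.001" and "1e-7" are accepted.
        bool ok = double.TryParse(input.Trim(), NumberStyles.Float,
                                  CultureInfo.InvariantCulture, out value);
        return ok ? value : defaultValue;
    }

    static void Main()
    {
        Console.Write("Function tolerance? (1E-07): ");
        double eps = ParseOrDefault(Console.ReadLine(), 1e-7);
        Console.WriteLine("Using tolerance {0}", eps);
    }
}
```

Separating the parsing from the console prompt keeps the fallback logic easy to check without typing at the keyboard.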

The program displays the following final results:

1. The coordinates of the minimum value.

2. The minimum function value.

3. The number of iterations.

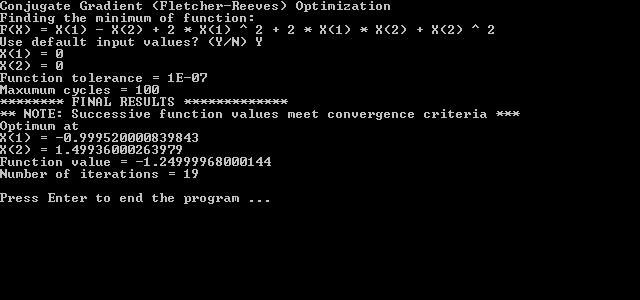

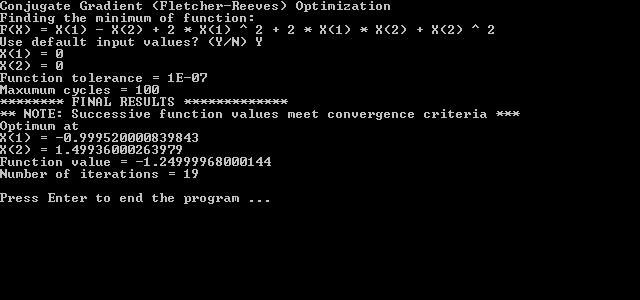

The current code finds the minimum for the following function:

f(x1,x2) = x1 - x2 + 2 * x1 ^ 2 + 2 * x1 * x2 + x2 ^ 2

The search starts from an initial value of 0 for each variable and uses a function tolerance of 1e-7 and a maximum of 100 search cycles. The images above show the sample console screen.
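As a check on the program's output, the minimum of this quadratic can be worked out by hand. The derivation below is not part of the original program; it sets both partial derivatives to zero and solves the resulting linear system.

```latex
\begin{aligned}
\frac{\partial f}{\partial x_1} &= 1 + 4x_1 + 2x_2 = 0 \\
\frac{\partial f}{\partial x_2} &= -1 + 2x_1 + 2x_2 = 0 \\
\Rightarrow\quad x_1 &= -1, \qquad x_2 = 1.5, \qquad f(-1,\,1.5) = -1.25
\end{aligned}
```

The Hessian of f has rows (4, 2) and (2, 2) and is positive definite, so this stationary point is the minimum. The program should therefore report values close to X(1) = -1, X(2) = 1.5 and a function value close to -1.25.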

Here is the listing for the main module. The module contains several test functions:

using System.Diagnostics;

using System.Data;

using System.Collections;

using Microsoft.VisualBasic;

using System.Collections.Generic;

using System;

namespace Optim_ConjugateGradient1

{

sealed class Module1

{

static public void Main()

{

int nNumVars = 2;

double[] fX = new double[] { 0, 0 };

double[] fParam = new double[] { 0, 0 };

int nIter = 0;

int nMaxIter = 100;

double fEpsFx = 0.0000001;

int i;

double fBestF;

string sAnswer;

string sErrorMsg = "";

CConjugateGradient1 oOpt;

MyFxDelegate MyFx = new MyFxDelegate( Fx3);

SayFxDelegate SayFx = new SayFxDelegate( SayFx3);

oOpt = new CConjugateGradient1();

Console.WriteLine("Conjugate Gradient (Fletcher-Reeves) Optimization");

Console.WriteLine("Finding the minimum of function:");

Console.WriteLine(SayFx());

Console.Write("Use default input values? (Y/N) ");

sAnswer = Console.ReadLine();

if (sAnswer.ToUpper() == "Y")

{

for (i = 0; i < nNumVars; i++)

{

Console.WriteLine("X({0}) = {1}", i + 1, fX[i]);

}

Console.WriteLine("Function tolerance = {0}", fEpsFx);

Console.WriteLine("Maximum cycles = {0}", nMaxIter);

}

else

{

for (i = 0; i < nNumVars; i++)

{

fX[i] = GetIndexedDblInput("X", i + 1, fX[i]);

}

fEpsFx = GetDblInput("Function tolerance", fEpsFx);

nMaxIter = GetIntInput("Maximum cycles", nMaxIter);

}

Console.WriteLine("******** FINAL RESULTS *************");

fBestF = oOpt.CalcOptim(nNumVars, ref fX, ref fParam, fEpsFx, nMaxIter, ref nIter, ref sErrorMsg, MyFx);

if (sErrorMsg.Length > 0)

{

Console.WriteLine("** NOTE: {0} ***", sErrorMsg);

}

Console.WriteLine("Optimum at");

for (i = 0; i < nNumVars; i++)

{

Console.WriteLine("X({0}) = {1}", i + 1, fX[i]);

}

Console.WriteLine("Function value = {0}", fBestF);

Console.WriteLine("Number of iterations = {0}", nIter);

Console.WriteLine();

Console.Write("Press Enter to end the program ...");

Console.ReadLine();

}

static public double GetDblInput(string sPrompt, double fDefInput)

{

string sInput;

Console.Write("{0}? ({1}): ", sPrompt, fDefInput);

sInput = Console.ReadLine();

if (sInput.Trim(null).Length > 0)

{

return double.Parse(sInput);

}

else

{

return fDefInput;

}

}

static public int GetIntInput(string sPrompt, int nDefInput)

{

string sInput;

Console.Write("{0}? ({1}): ", sPrompt, nDefInput);

sInput = Console.ReadLine();

if (sInput.Trim(null).Length > 0)

{

return (int) double.Parse(sInput);

}

else

{

return nDefInput;

}

}

static public double GetIndexedDblInput(string sPrompt, int nIndex, double fDefInput)

{

string sInput;

Console.Write("{0}({1})? ({2}): ", sPrompt, nIndex, fDefInput);

sInput = Console.ReadLine();

if (sInput.Trim(null).Length > 0)

{

return double.Parse(sInput);

}

else

{

return fDefInput;

}

}

static public int GetIndexedIntInput(string sPrompt, int nIndex, int nDefInput)

{

string sInput;

Console.Write("{0}({1})? ({2}): ", sPrompt, nIndex, nDefInput);

sInput = Console.ReadLine();

if (sInput.Trim(null).Length > 0)

{

return (int) double.Parse(sInput);

}

else

{

return nDefInput;

}

}

static public string SayFx1()

{

return "F(X) = 10 + (X(1) - 2) ^ 2 + (X(2) + 5) ^ 2";

}

static public double Fx1(int N, ref double[] X, ref double[] fParam)

{

return 10 + Math.Pow(X[0] - 2, 2) + Math.Pow(X[1] + 5, 2);

}

static public string SayFx2()

{

return "F(X) = 100 * (X(1) - X(2) ^ 2) ^ 2 + (X(2) - 1) ^ 2";

}

static public double Fx2(int N, ref double[] X, ref double[] fParam)

{

return 100 * Math.Pow(X[0] - X[1] * X[1], 2) + Math.Pow(X[1] - 1, 2);

}

static public string SayFx3()

{

return "F(X) = X(1) - X(2) + 2 * X(1) ^ 2 + 2 * X(1) * X(2) + X(2) ^ 2";

}

static public double Fx3(int N, ref double[] X, ref double[] fParam)

{

return X[0] - X[1] + 2 * X[0] * X[0] + 2 * X[0] * X[1] + X[1] * X[1];

}

}

}

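Notice that each user-defined function has an accompanying helper function that returns the mathematical expression of the function being optimized. For example, function Fx1 has the helper function SayFx1, which returns the expression evaluated in Fx1. As the listings show, a user-defined function must match the MyFxDelegate signature, its helper must match the SayFxDelegate signature, and both are passed to the delegate constructors in Main.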
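To illustrate the pairing of an objective function with its helper, here is a minimal sketch of a further test function. The names `Fx4`, `SayFx4`, and `NewFunctionSketch` are hypothetical and do not appear in the original listing; the two delegates are redeclared with the same shapes as in the program only so that the sketch compiles on its own.

```csharp
using System;

// Same delegate shapes as the ones declared alongside CConjugateGradient1.
public delegate double MyFxDelegate(int nNumVars, ref double[] fX, ref double[] fParam);
public delegate string SayFxDelegate();

static class NewFunctionSketch
{
    // Hypothetical helper: returns the expression evaluated in Fx4.
    public static string SayFx4()
    {
        return "F(X) = 3 + (X(1) - 1) ^ 2 + 2 * (X(2) + 2) ^ 2";
    }

    // Hypothetical test function: a shifted bowl whose minimum value
    // is 3 at X = (1, -2).
    public static double Fx4(int N, ref double[] X, ref double[] fParam)
    {
        return 3 + Math.Pow(X[0] - 1, 2) + 2 * Math.Pow(X[1] + 2, 2);
    }

    static void Main()
    {
        // Wire the pair to the delegates the same way Main does for Fx3.
        MyFxDelegate MyFx = new MyFxDelegate(Fx4);
        SayFxDelegate SayFx = new SayFxDelegate(SayFx4);
        double[] fX = new double[] { 1, -2 };
        double[] fParam = new double[] { 0, 0 };
        Console.WriteLine(SayFx());
        Console.WriteLine("F = {0}", MyFx(2, ref fX, ref fParam));
    }
}
```

In the program itself, the equivalent change would be to add the two functions to Module1 and to replace Fx3 and SayFx3 in the two delegate constructor calls.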

The program uses the following class to optimize the objective function:

using System.Diagnostics;

using System.Data;

using System.Collections;

using Microsoft.VisualBasic;

using System.Collections.Generic;

using System;

namespace Optim_ConjugateGradient1

{

public delegate double MyFxDelegate(int nNumVars, ref double[] fX, ref double[] fParam);

public delegate string SayFxDelegate();

public class CConjugateGradient1

{

MyFxDelegate m_MyFx;

public double MyFxEx(int nNumVars, ref double[] fX, ref double[] fParam, ref double[] fDeltaX, double fLambda)

{

int i;

double[] fXX = new double[nNumVars];

for (i = 0; i < nNumVars; i++)

{

fXX[i] = fX[i] + fLambda * fDeltaX[i];

}

return m_MyFx(nNumVars, ref fXX, ref fParam);

}

private void GetGradients(int nNumVars, ref double[] fX, ref double[] fParam, ref double[] fDeriv, ref double fDerivNorm)

{

int i;

double fXX, H, Fp, Fm;

fDerivNorm = 0;

for (i = 0; i < nNumVars; i++)

{

fXX = fX[i];

H = 0.01 *(1 + Math.Abs(fXX));

fX[i] = fXX + H;

Fp = m_MyFx(nNumVars, ref fX, ref fParam);

fX[i] = fXX - H;

Fm = m_MyFx(nNumVars, ref fX, ref fParam);

fX[i] = fXX;

fDeriv[i] = (Fp - Fm) / 2 / H;

fDerivNorm += Math.Pow(fDeriv[i], 2);

}

fDerivNorm = Math.Sqrt(fDerivNorm);

}

public bool LinSearch_DirectSearch(int nNumVars, ref double[] fX, ref double[] fParam, ref double fLambda, ref double[] fDeltaX, double InitStep, double MinStep)

{

double F1, F2;

F1 = MyFxEx(nNumVars, ref fX, ref fParam, ref fDeltaX, fLambda);

do

{

F2 = MyFxEx(nNumVars, ref fX, ref fParam, ref fDeltaX, fLambda + InitStep);

if (F2 < F1)

{

F1 = F2;

fLambda += InitStep;

}

else

{

F2 = MyFxEx(nNumVars, ref fX, ref fParam, ref fDeltaX, fLambda - InitStep);

if (F2 < F1)

{

F1 = F2;

fLambda -= InitStep;

}

else

{

// reduce search step size

InitStep /= 10;

}

}

} while (!(InitStep < MinStep));

return true;

}

public double CalcOptim(int nNumVars, ref double[] fX, ref double[] fParam, double fEpsFx, int nMaxIter, ref int nIter, ref string sErrorMsg, MyFxDelegate MyFx)

{

int i;

double[] fDeriv = new double[nNumVars];

double[] fDerivOld = new double[nNumVars];

double F, fDFNormOld, fLambda, fLastF, fDFNorm = 0;

m_MyFx = MyFx;

// calculate the function value and gradient at the initial point

fLastF = MyFx(nNumVars, ref fX, ref fParam);

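GetGradients(nNumVars, ref fX, ref fParam, ref fDeriv, ref fDFNorm);

// start along the steepest-descent direction (the negative gradient)

for (i = 0; i < nNumVars; i++)

{

fDeriv[i] = -fDeriv[i];

}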

fLambda = 0.1;

if (LinSearch_DirectSearch(nNumVars, ref fX, ref fParam, ref fLambda, ref fDeriv, 0.1, 0.000001))

{

for (i = 0; i < nNumVars; i++)

{

fX[i] += fLambda * fDeriv[i];

}

}

else

{

sErrorMsg = "Failed linear search";

return fLastF;

}

nIter = 1;

do

{

nIter++;

if (nIter > nMaxIter)

{

sErrorMsg = "Reached maximum iterations limit";

break;

}

fDFNormOld = fDFNorm;

for (i = 0; i < nNumVars; i++)

{

fDerivOld[i] = fDeriv[i]; // save old gradient

}

GetGradients(nNumVars, ref fX, ref fParam, ref fDeriv, ref fDFNorm);

for (i = 0; i < nNumVars; i++)

{

fDeriv[i] = Math.Pow((fDFNorm / fDFNormOld), 2)* fDerivOld[i] - fDeriv[i];

}

if (fDFNorm <= fEpsFx)

{

sErrorMsg = "Gradient norm meets convergence criteria";

break;

}

// For i = 0 To nNumVars - 1

// fDeriv(i) = -fDeriv(i) / fDFNorm

// Next i

fLambda = 0;

// If LinSearch_Newton(fX, nNumVars, fLambda, fDeriv, 0.0001, 100) Then

if (LinSearch_DirectSearch(nNumVars, ref fX, ref fParam, ref fLambda, ref fDeriv, 0.1, 0.000001))

{

for (i = 0; i < nNumVars; i++)

{

fX[i] += fLambda * fDeriv[i];

}

F = MyFx(nNumVars, ref fX, ref fParam);

if (Math.Abs(F - fLastF) < fEpsFx)

{

sErrorMsg = "Successive function values meet convergence criteria";

break;

}

else

{

fLastF = F;

}

}

else

{

sErrorMsg = "Failed linear search";

break;

}

} while (true);

return fLastF;

}

}

}
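The Fletcher-Reeves update at the heart of CalcOptim can be seen more plainly in a stripped-down sketch. The code below is not the author's class: it assumes the analytic gradient and constant Hessian of the sample function f(x1,x2) = x1 - x2 + 2 * x1 ^ 2 + 2 * x1 * x2 + x2 ^ 2, and it swaps the direct line search for the exact step length that is available for a quadratic. The names `FletcherReevesSketch`, `Minimize`, `Gradient`, `HessianTimes`, and `Dot` are hypothetical.

```csharp
using System;

static class FletcherReevesSketch
{
    // Analytic gradient of f(x1,x2) = x1 - x2 + 2*x1^2 + 2*x1*x2 + x2^2.
    static double[] Gradient(double[] x)
    {
        return new double[] { 1 + 4 * x[0] + 2 * x[1], -1 + 2 * x[0] + 2 * x[1] };
    }

    // Product of the constant Hessian [[4, 2], [2, 2]] with a vector.
    static double[] HessianTimes(double[] d)
    {
        return new double[] { 4 * d[0] + 2 * d[1], 2 * d[0] + 2 * d[1] };
    }

    static double Dot(double[] a, double[] b)
    {
        return a[0] * b[0] + a[1] * b[1];
    }

    // Returns the minimizer; 'iterations' reports how many line searches ran.
    public static double[] Minimize(double[] start, double tol, int maxIter, out int iterations)
    {
        double[] x = (double[])start.Clone();
        double[] g = Gradient(x);
        double[] d = { -g[0], -g[1] };   // first direction: steepest descent
        iterations = 0;
        while (Math.Sqrt(Dot(g, g)) > tol && iterations < maxIter)
        {
            // Exact step length for a quadratic: alpha = -(g.d) / (d.A.d)
            double alpha = -Dot(g, d) / Dot(d, HessianTimes(d));
            x[0] += alpha * d[0];
            x[1] += alpha * d[1];
            double[] gNew = Gradient(x);
            // Fletcher-Reeves ratio of squared gradient norms
            double beta = Dot(gNew, gNew) / Dot(g, g);
            // New direction: negative gradient plus beta times the old direction
            d = new double[] { -gNew[0] + beta * d[0], -gNew[1] + beta * d[1] };
            g = gNew;
            iterations++;
        }
        return x;
    }

    static void Main()
    {
        int n;
        double[] x = Minimize(new double[] { 0, 0 }, 1e-7, 100, out n);
        Console.WriteLine("X(1) = {0}, X(2) = {1}, iterations = {2}", x[0], x[1], n);
    }
}
```

With exact line searches, conjugate gradients needs at most two steps on a two-variable quadratic, and this sketch reaches (-1, 1.5) from (0, 0) in two. The class above may take a different number of cycles because it estimates gradients by central differences and locates each step length with a direct search.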

Copyright (c) Namir Shammas. All rights reserved.